
1. Fetch your data

Setting

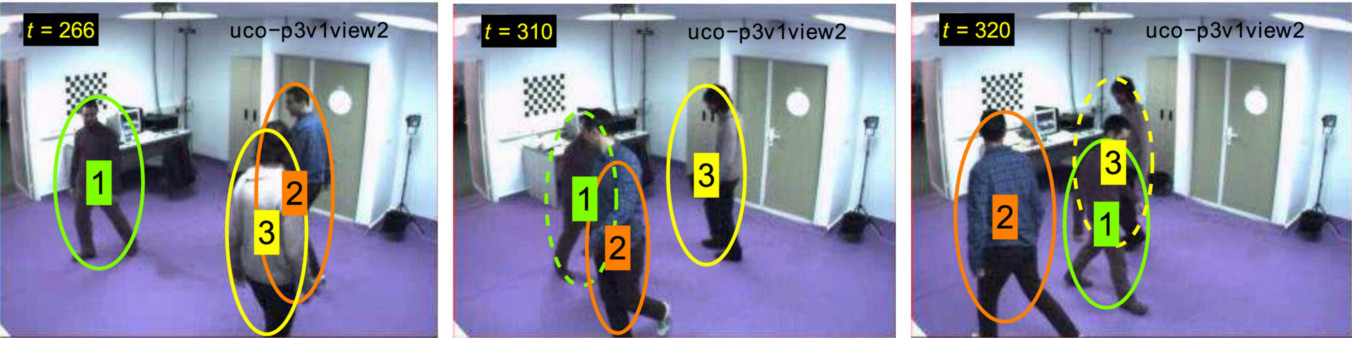

In this setting, people move around and interact in a public space, and we want to identify complex activities, such as people walking together, fighting or meeting.

Data

The data on which we build our identification are a set of recognized elementary (short-term) behaviors, such as a person running, moving abruptly or standing still.

The data come from the benchmark CAVIAR dataset.

Example

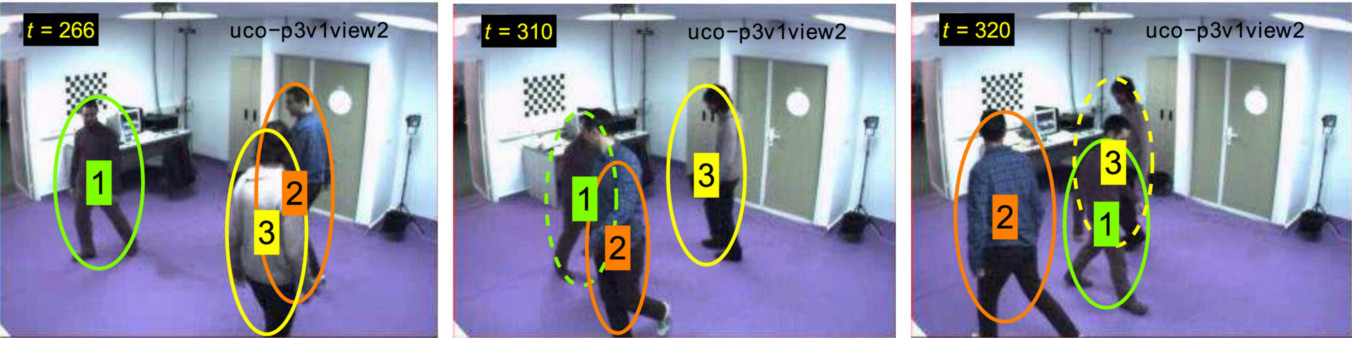

For our scenario we gather data about two persons (George and Alex) at specific moments in time, indicated by numeric timestamps. For each timestamp we hold each person's current action status and position in the space. Below we describe the gathered data both in everyday language and in the corresponding formal representation.
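As an illustration of what such a formal representation might look like, the sketch below renders two observations as ground facts. The `happensAt(behavior(...), T)` form mirrors the terms used elsewhere on this page; the `coords` predicate, the `Observation` type, the `to_facts` helper, and all timestamps and coordinates are hypothetical and are not taken from the CAVIAR data.

```python
from typing import NamedTuple


class Observation(NamedTuple):
    """One person's status at one timestamp (illustrative values only)."""
    person: str
    behavior: str
    x: int
    y: int
    time: int


def to_facts(obs: Observation) -> list[str]:
    """Render an observation as two ground facts in logic-program syntax."""
    p = obs.person.lower()  # constants start with a lowercase letter
    return [
        f"happensAt(behavior({p},{obs.behavior}),{obs.time}).",
        # 'coords' is an assumed predicate name for the position in the space.
        f"holdsAt(coords({p},{obs.x},{obs.y}),{obs.time}).",
    ]


# Invented sample data for George and Alex at timestamp 1.
observations = [
    Observation("George", "abrupt", 10, 12, 1),
    Observation("Alex", "active", 14, 15, 1),
]
facts = [fact for obs in observations for fact in to_facts(obs)]

for fact in facts:
    print(fact)
```

Each observation yields one event fact for the person's behavior and one fact for the person's position, both tagged with the same timestamp.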


2. Describe your target language

Description

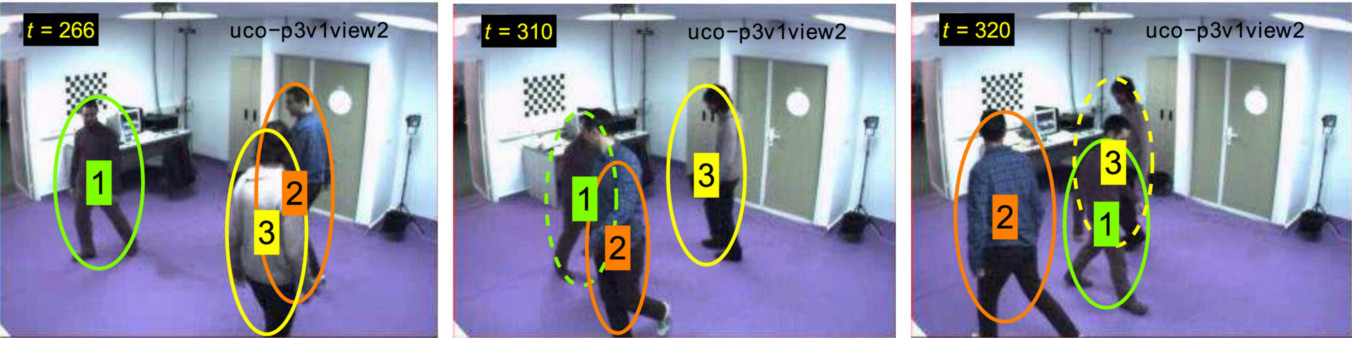

ILED learns a set of logical rules from data. To facilitate learning, it is useful to provide a general description of what these rules should look like: for instance, which predicates may be placed in the heads and bodies of the rules, and what the type of each variable appearing in a rule is. Formally, ILED uses mode declarations (see here for more info).

Example

In activity recognition we would like to learn definitions of when two persons are fighting, based on their behaviors and their distance. Using mode declarations, this target language can be specified as follows.

A head declaration names a predicate that may appear in the head of a rule (a head predicate symbol). The argument inside head(.) in this declaration is a template for creating such terms, which should have the form initiatedAt(fighting(X,Y),Z). Here X, Y and Z are variables (this is indicated by the corresponding + symbol in the template) of types person, person and time respectively. A second head declaration covers terms of the form terminatedAt(fighting(X,Y),Z).

A body declaration names a predicate that may appear in the body of a rule (a body predicate symbol). The argument inside body(.) is a template for terms of the form holdsAt(close(X,Y,35),Z). Here X, Y and Z are variables (as indicated by the + symbol in the template) of types person, person and time respectively, while 35 is a domain constant (as indicated by the # symbol in the template) of type distance.

Further body declarations cover terms of the form happensAt(behavior(X,abrupt),Y) (here X and Y are variables and abrupt is a domain constant), or happensAt(behavior(X,active),Y).
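The template mechanism described above can be sketched in a few lines. This is a minimal illustration, not ILED's implementation: the `instantiate` function and the variable naming scheme (`V0`, `V1`, ...) are hypothetical, and the two template strings are reconstructed from the description of the + and # markers rather than copied from the tool.

```python
import re
from itertools import count


def instantiate(template: str, constants: dict[str, str]) -> str:
    """Turn a mode template into a term.

    Every '+type' placeholder becomes a fresh variable, and every
    '#type' placeholder becomes the constant supplied for that type.
    """
    fresh = count()  # numbers the generated variables V0, V1, ...

    def fill(match: re.Match) -> str:
        marker, type_name = match.group(1), match.group(2)
        if marker == "+":
            return f"V{next(fresh)}"
        return constants[type_name]

    return re.sub(r"([+#])(\w+)", fill, template)


# Templates reconstructed from the prose description (assumed syntax).
head_template = "initiatedAt(fighting(+person,+person),+time)"
body_template = "holdsAt(close(+person,+person,#distance),+time)"

head_term = instantiate(head_template, {})
body_term = instantiate(body_template, {"distance": "35"})

print(head_term)
print(body_term)
```

The type names after + and # are ignored when naming variables here; a real learner would also use them to make sure that, for example, a time variable is never placed where a person is expected.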


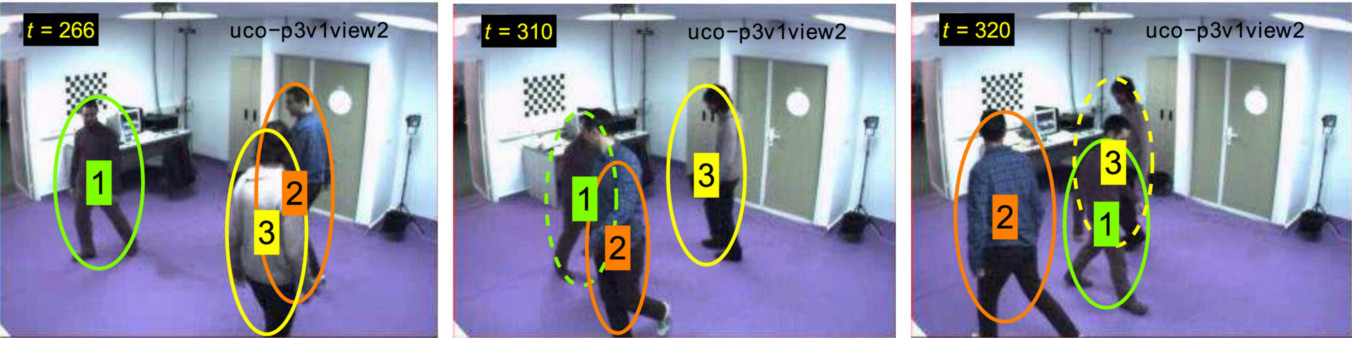

3. Perform learning with ILED


4. See results

Learnt rules

    initiatedAt(fighting(X,Y),T) :-
        happensAt(behavior(X,abrupt),T),
        holdsAt(close(X,Y,25),T).

    terminatedAt(fighting(X,Y),T) :-
        happensAt(behavior(X,running),T),
        not holdsAt(close(X,Y,30),T).

Explanation

The first rule states that fighting between two persons is initiated if one of them is moving abruptly and their Euclidean distance is less than 25.

The second rule states that fighting between two persons is terminated when one of them is running and their Euclidean distance is more than 30.
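To make the reading of the two rules concrete, the sketch below evaluates them against a snapshot of two people at one timestamp. It is an illustration only: the snapshot data are invented, the function names are hypothetical, and `close` is assumed to hold when the Euclidean distance is strictly less than the threshold, following the explanation above.

```python
import math

# Hypothetical snapshot at a single timestamp: person -> (behavior, x, y).
near = {
    "george": ("abrupt", 10.0, 12.0),
    "alex": ("active", 14.0, 15.0),
}
far = {
    "george": ("running", 0.0, 0.0),
    "alex": ("active", 40.0, 0.0),
}


def close(state, a, b, threshold):
    """holdsAt(close(a,b,threshold),T), assumed to mean distance < threshold."""
    (_, ax, ay), (_, bx, by) = state[a], state[b]
    return math.hypot(ax - bx, ay - by) < threshold


def initiates_fighting(state, x, y):
    # initiatedAt(fighting(X,Y),T) :-
    #     happensAt(behavior(X,abrupt),T), holdsAt(close(X,Y,25),T).
    return state[x][0] == "abrupt" and close(state, x, y, 25)


def terminates_fighting(state, x, y):
    # terminatedAt(fighting(X,Y),T) :-
    #     happensAt(behavior(X,running),T), not holdsAt(close(X,Y,30),T).
    return state[x][0] == "running" and not close(state, x, y, 30)


print(initiates_fighting(near, "george", "alex"))
print(terminates_fighting(far, "george", "alex"))
```

In the `near` snapshot George moves abruptly at distance 5 from Alex, so the first rule fires for the pair (george, alex) but not for (alex, george), since the rule checks the behavior of its first argument only. In the `far` snapshot George is running at distance 40, so the second rule fires.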